Last week I put out the Black Swan In The Enterprise, which argued that most uptime planning depends on the ability to predict the future, and sometimes the future is wildly different from what you would have predicted. Things fall apart; the centre does not hold, and they fall apart very differently from what the team planned for up front. If that’s the case, what’s the company to do, and how can operating system vendors promise those 99.995% uptimes and take themselves seriously?

System Effects

The incredibly positive uptimes we see in sales and marketing material all come from the same place: looking at one component of the project in isolation. For example, let’s look at that Amazon outage again. The servers lost power. This has nothing to do with the operating system; it’s not the operating system’s fault! You can almost hear the executives arguing “look, man, I said 99.995% uptime, but you can’t get that if you yank the power cord out.”

Or yank the internet connection.

Or a bridge or router fails.

Or a sysadmin makes a mistake and misconfigures a routing table.

Or a sysadmin botches a firewall rule, and suddenly your webserver is prevented from talking to the internet.

Or the load balancer gets botched, pointing to an old server that is no longer there.

Or …

The problem isn’t an individual component — it is all the components, acting as a system. Just like the old cliche, the delivery chain of software is only as strong as its weakest link. My suggestion on what to do with this is more than a little bit counter-intuitive.
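To put rough numbers on the weakest-link point, here is a minimal Python sketch. It assumes every component must be up at the same time and that failures are independent, so the availabilities multiply. The component names and figures are made up for illustration; only the 99.995% comes from the sales pitch above.

```python
from math import prod

MINUTES_PER_YEAR = 365 * 24 * 60

# Hypothetical availabilities for each link in the delivery chain.
# Only the operating system figure echoes the 99.995% claim; the rest are invented.
components = {
    "operating_system": 0.99995,
    "power": 0.9995,
    "network_link": 0.999,
    "router": 0.9995,
    "firewall_config": 0.999,
    "load_balancer": 0.9995,
}


def series_availability(availabilities):
    """Availability of a chain in which every component must be up at once.

    Assumes the components fail independently of each other.
    """
    return prod(availabilities)


def downtime_minutes_per_year(availability):
    """Expected minutes of downtime per year for a given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


system = series_availability(components.values())
print(f"OS alone:    {downtime_minutes_per_year(0.99995):.0f} minutes/year")
print(f"Whole chain: {downtime_minutes_per_year(system):.0f} minutes/year")
```

On its own, the operating system is allowed about 26 minutes of downtime a year. Under these invented numbers the chain as a whole does far worse than any single link in it, which is exactly the gap between the brochure and the outage.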

A Surprising Discovery

Above I talked about computers as a system, but systems are more than computers. Get ten human beings together to build software, and you have a different kind of system. Go to the doctor with a problem breathing, and you’ll discover a different kind of system — the human body. It’s the body I will start with.

The human body is fascinating as a system because it is so amazingly redundant – two ears, two lungs, two nostrils – two ways of breathing air for goodness sake (nose and mouth).

To an ‘efficiency expert’, the human body might look like an over-populated department, full of fat, ready to be sliced. Why, out of one person you could practically make two!

Except it turns out that redundancy is the reason the human body works.

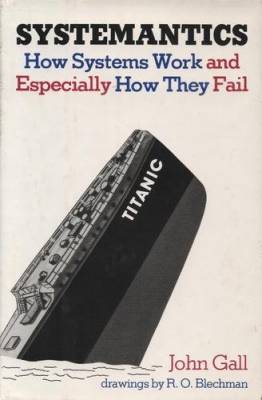

John Gall, a medical doctor, noticed this in his own research, and realized that it applies to more than humans. He went on to write Systemantics: How Systems Work, and Especially How They Fail. His first, most basic insight: in any system that has developed in an evolutionary way, some component is failing and some other component is compensating for that failure. All. The. Time.

So if you’ve ever worked in an organization that grew up organically, and felt that the middle managers were bailing out the executives, or the project managers doing the work for the analysts, or the testers existed to ‘save the bacon’ of the programmers — well, you were right. That was exactly what was happening, and as it should be. That is simply what happens in systems that evolve.

In humans, when you have a cold and cannot breathe through the nose, you can breathe through your mouth. Sure, it’s uncouth, but if you do this, you don’t die. It’s kind of important. The massive redundancy in the human body means a broken arm does not mean death, and the number one cause of death, heart failure, is ironically the one element of the system that is singular. Perhaps that is not ironic at all.

Gall does talk about a second category of system: those designed in “Big Bang” style instead of evolving. For example, the US Space Shuttle was a reusable launch vehicle, designed to land safely back on Earth. The shuttle was a radical departure from the Gemini and Apollo projects that preceded it, and it was designed to work immediately on the first mission. Planned for a failure rate of 1 in 10,000, the Shuttle’s actual failure rate was more like 1 in 100. After thirty years of flights, the shuttle program was quietly closed in 2011. We can talk more about the shuttle program in a future post. For now, it’s enough to know that the design style for that system was revolutionary, not evolutionary. Revolutionary system changes are likely to fail, while evolutionary designs tend to have lots of tiny failures that the overall system compensates for.

Back In The Enterprise

That means that instead of trying to write a perfect system that never fails, I’m more likely to plan for many failure points, and to plan for a second system to catch, correct, or compensate for each error. This can include humans. If a system fails entirely, but a monitoring system (or a human) corrects the error in seconds, we’ll be a whole lot closer to that 99.995% uptime. Compare that with the same failure when nobody does anything until customer phone calls eventually get escalated to someone who matters, who then starts acting as incident commander, calling technical staff until someone does not say “not my problem” or “call me Monday.”
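A quick back-of-the-envelope sketch in Python shows how much recovery time matters. The failure count and both recovery times are assumptions picked for illustration, not measurements from any real system.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def availability(failures_per_year, minutes_to_recover):
    """Fraction of the year the service is up.

    Treats every failure as costing the same number of minutes to
    notice and fix, which is a simplification.
    """
    downtime = failures_per_year * minutes_to_recover
    return 1 - downtime / MINUTES_PER_YEAR


# Hypothetical scenario: the same 12 failures a year, handled two ways.
FAILURES_PER_YEAR = 12
auto = availability(FAILURES_PER_YEAR, minutes_to_recover=0.5)    # monitoring reacts in 30 seconds
manual = availability(FAILURES_PER_YEAR, minutes_to_recover=240)  # four hours of phone calls

print(f"automated recovery:  {auto:.5%}")
print(f"escalation by phone: {manual:.5%}")
```

Under these assumptions the fast-recovery case stays above 99.995%, while the phone-tree case falls below 99.5%, even though the system failed exactly as often in both.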

There are dozens of tricks to do this: creating runbooks to prepare for when failures happen, strong monitoring, planning for failover, good emergency cutover procedures, and building machines with built-in redundancy. These go all the way down to the hard drive and server component level; more about that next time.
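As one small illustration of planning for failover, here is a minimal Python sketch of a caller that tries a redundant backend when the first one fails. The `primary` and `standby` functions are hypothetical stand-ins for real servers, and `call_with_failover` is a name invented for this example, not part of any library.

```python
from typing import Callable, Sequence


def call_with_failover(backends: Sequence[Callable[[], str]]) -> str:
    """Try each redundant backend in order and return the first answer."""
    errors = []
    for backend in backends:
        try:
            return backend()
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(exc)
    raise RuntimeError(f"all {len(backends)} backends failed: {errors}")


# Hypothetical backends standing in for a primary and a standby server.
def primary() -> str:
    raise ConnectionError("primary is down")


def standby() -> str:
    return "served by standby"


print(call_with_failover([primary, standby]))  # prints "served by standby"
```

The primary fails here on every call, and the caller never notices. That is Gall's compensation idea in miniature: one component fails, another covers for it.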

The bottom line – by planning for systems to fail, and having backups and compensation for that failure, yes, we can improve outcomes. It’s going to be a lot of work.

More to come.